Mobilizing.js

Developed at EnsadLab, the research laboratory of the École Nationale Supérieure des Arts Décoratifs in Paris, Mobilizing.js is a research project to create a software authoring environment for artists and designers. Its objective is twofold:

- Promote the creation of interactive works on various forms of screen devices (computers, mobile screens, IoT, etc.).

- Provide a platform for artistic creation dedicated to collective interactivity in co-presence.

Because web technologies have become versatile and rest on open, standard specifications, we chose them to develop all the technical components of Mobilizing.js, which are themselves released under a free license. The Mobilizing.js environment has two main components: a library and a platform.

The Library

Web technologies now make it easier to create interactive art for mobile screens (smartphones and tablets). The web browser has become a universal virtual machine, available on a wide variety of devices, and it offers considerable potential for artistic creation and interaction design. Since many libraries already handle media (images, sounds, videos), such as Three.js, D3.js, and p5.js, the Mobilizing.js library focuses on a single task: making the interaction peripherals embedded in mobile screens accessible through a consistent API, along with certain peripherals relevant to art and design works (gamepads, cameras, microphones, etc.). Built on a modular design, the library targets several kinds of users:

- Creators with basic knowledge of web programming who wish to engage in interactive digital creation;

- Students involved in interactive artistic creation projects and interaction design;

- Developers looking to simplify their use of embedded sensors on mobile devices.
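To make the idea of a "consistent API" concrete, the following is a hypothetical sketch, not the Mobilizing.js source code: every sensor wrapper exposes the same `start()` / `stop()` / `on(event, handler)` lifecycle, which mirrors the pattern used by the `Pointers` class in the minimal example further down. The class name `SensorSource` and its constructor parameters are illustrative assumptions; the only real APIs used are the standard DOM `EventTarget.addEventListener` and `removeEventListener`.

```javascript
// HYPOTHETICAL illustration only: this is not the Mobilizing.js implementation.
// Any native event source (window, document, an element) is wrapped behind
// one uniform lifecycle: on(), start(), stop().
class SensorSource {
  constructor(target, domEvents) {
    this.target = target;        // an EventTarget such as window or document
    this.domEvents = domEvents;  // native event names to relay, e.g. ["deviceorientation"]
    this.handlers = new Map();   // event name -> Set of handler functions
    // a single bound listener so that stop() can remove exactly what start() added
    this.relay = (event) => this.emit(event.type, event);
  }

  // register a handler for an event name; returns this to allow chaining
  on(name, handler) {
    if (!this.handlers.has(name)) this.handlers.set(name, new Set());
    this.handlers.get(name).add(handler);
    return this;
  }

  // call every handler registered for this event name
  emit(name, payload) {
    for (const handler of this.handlers.get(name) ?? []) handler(payload);
  }

  // begin listening to the native events
  start() {
    for (const type of this.domEvents) this.target.addEventListener(type, this.relay);
  }

  // stop listening; registered handlers are kept for a later start()
  stop() {
    for (const type of this.domEvents) this.target.removeEventListener(type, this.relay);
  }
}
```

In a browser, `new SensorSource(window, ["deviceorientation"])` followed by `.on("deviceorientation", (e) => console.log(e.alpha, e.beta, e.gamma))` and `.start()` would relay orientation events through the same interface as any other wrapped peripheral. Keeping one lifecycle for every peripheral is what lets a creator switch from pointers to orientation or motion without learning a new event model each time.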

The Platform

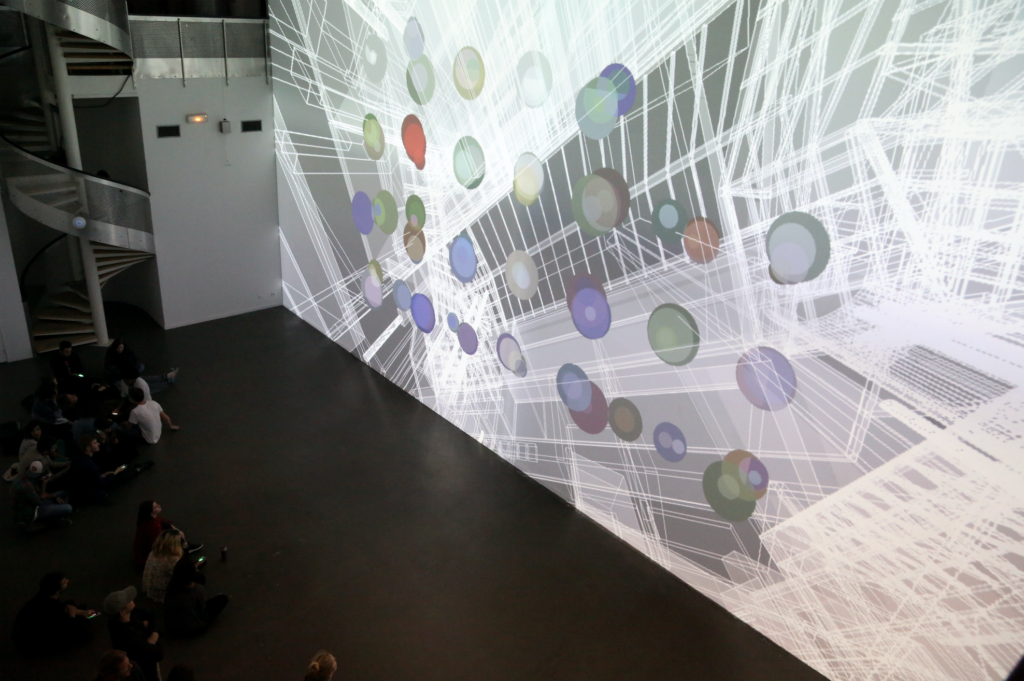

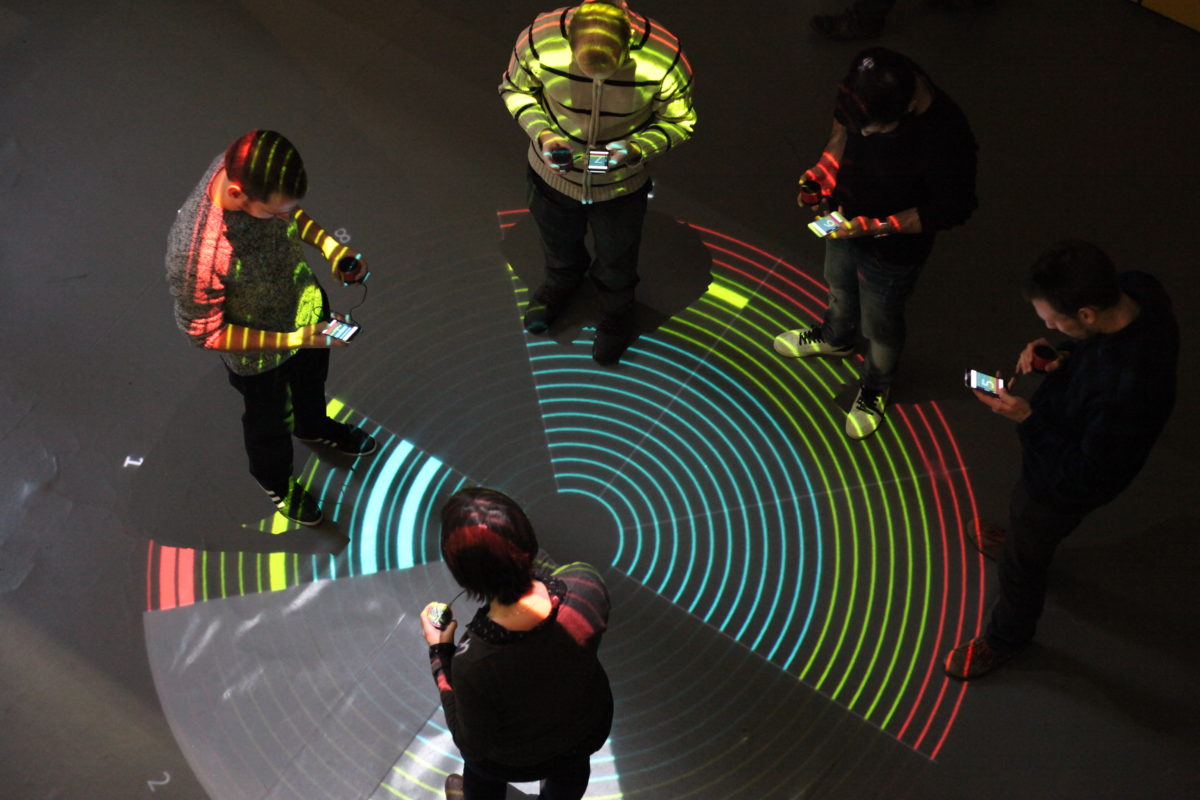

Co-situated collective interactivity can be explored on unprecedented scales thanks to mobile technologies: a group of people present in the same location can interact together on common objects, such as immersive images or a sound environment, using mobile screens. If interactivity in art consists of formalizing relationships between a viewer and a device to create a work, then collective interactivity creates a work by formalizing relationships between co-present participants and their environment. Collaboration, association, participation, cooperation, confrontation, competition, or even opposition are all figures of collective interactivity that can emerge or be proposed (or even imposed).

The Mobilizing.js platform extends Soundworks, a technical framework developed by IRCAM for building collective interactivity devices. The main contribution of Mobilizing.js is the notion of sketch: within a single experience, several scenarios can run in parallel. This makes it possible to host distinct projects that rely on common resources side by side, or to run several scenarios simultaneously. As a research-creation project, Mobilizing.js also explores methodologies for writing and designing collective interactivity scenarios, as well as the hardware needed for the experiences to run properly.
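The notion of sketch can be pictured with a small in-memory model. The sketch below is a hypothetical illustration written for this explanation, not the Mobilizing.js or Soundworks API: the names `Experience`, `addSketch`, `removeSketch`, `dispatch`, `setup`, `onMessage`, and `teardown` are all assumptions. It shows one experience hosting several sketches that share a common resource store, with messages from participants routed either to every sketch or to a single named one. It ignores networking and does not model true parallel execution.

```javascript
// HYPOTHETICAL model of the "sketch" notion, for illustration only:
// it is NOT the Mobilizing.js or Soundworks API and ignores networking.
class Experience {
  constructor() {
    this.sketches = new Map(); // sketch name -> sketch object
    this.shared = new Map();   // resources common to all sketches (media, parameters...)
  }

  // register a sketch; its optional setup() receives the shared resources
  addSketch(name, sketch) {
    this.sketches.set(name, sketch);
    if (typeof sketch.setup === "function") sketch.setup(this.shared);
  }

  // unregister one sketch without affecting the others
  removeSketch(name) {
    const sketch = this.sketches.get(name);
    if (sketch && typeof sketch.teardown === "function") sketch.teardown();
    this.sketches.delete(name);
  }

  // route a participant's message to one named sketch, or to every sketch if no target is given
  dispatch(message, target = null) {
    for (const [name, sketch] of this.sketches) {
      if (target !== null && target !== name) continue;
      if (typeof sketch.onMessage === "function") sketch.onMessage(message, this.shared);
    }
  }
}

// Usage: two sketches running side by side in the same experience
const experience = new Experience();
experience.shared.set("tempo", 120);

const received = { light: [], sound: [] };
experience.addSketch("light", { onMessage: (msg) => received.light.push(msg) });
experience.addSketch("sound", {
  onMessage: (msg, shared) => received.sound.push({ ...msg, tempo: shared.get("tempo") }),
});

experience.dispatch({ from: "phone-1", tilt: 0.3 });          // both sketches receive it
experience.dispatch({ from: "phone-2", tap: true }, "sound"); // only "sound" receives it
```

The design choice illustrated here is isolation plus sharing: removing or replacing one sketch leaves the others running, while resources loaded once in `shared` remain available to all of them.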

Technical Information (version 1.0.1-alpha, 06/2025)

The Mobilizing.js library has very few software dependencies. It is organized as a monorepo (a single online repository holding several packages): core and helpers. Core gathers the main classes for using sensors under its io directory (DeviceMotion, DeviceOrientation, Pointers, GPS, etc.), while utils contains utility functions that simplify some frequent sensor use cases (PermissionsManager, Cartography, etc.). Refer to the examples to learn more, and consult the complete documentation of the available classes.

Mobilizing.js is compatible with the current versions of the most common browsers (Safari, Firefox, and Chrome), on desktop computers as well as on mobile screens. Some features are only available on certain devices (for example, motion and orientation sensors on mobile screens).
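Because sensor availability varies from one device and browser to another, a work can check for the relevant Web APIs before relying on them. The function below is a minimal sketch based only on standard browser APIs (`PointerEvent`, `DeviceOrientationEvent`, `DeviceMotionEvent`, `navigator.geolocation`, `navigator.getGamepads`, `navigator.mediaDevices.getUserMedia`); it is not part of Mobilizing.js, and the name `detectFeatures` and its return fields are assumptions made for this example. The `env` parameter defaults to the global object so the function can also be exercised with a mock.

```javascript
// Minimal feature check using standard Web APIs (NOT a Mobilizing.js function).
// `env` defaults to the global object; passing a mock makes the check testable.
function detectFeatures(env = globalThis) {
  const nav = env.navigator ?? {};
  return {
    pointerEvents: typeof env.PointerEvent === "function",
    deviceOrientation: typeof env.DeviceOrientationEvent === "function",
    deviceMotion: typeof env.DeviceMotionEvent === "function",
    // Safari on iOS exposes requestPermission() and requires calling it
    // from a user gesture (e.g. a click) before motion/orientation events fire
    sensorsNeedPermission:
      typeof env.DeviceMotionEvent?.requestPermission === "function" ||
      typeof env.DeviceOrientationEvent?.requestPermission === "function",
    geolocation: "geolocation" in nav,
    gamepads: typeof nav.getGamepads === "function",
    camerasAndMicrophones: typeof nav.mediaDevices?.getUserMedia === "function",
  };
}
```

In a page, `const features = detectFeatures();` can then drive the interface, for example by showing a "tap to enable sensors" button only when `features.sensorsNeedPermission` is true. Note that the presence of an API does not guarantee the hardware exists: a desktop browser may define `DeviceOrientationEvent` without ever firing it.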

Example of minimal source code:

NB: This example uses the UMD (Universal Module Definition) build of the library.

index.html

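```html
<!DOCTYPE html>
<html>
<head>
    <meta charset="utf-8">
    <!-- scale the page to the device width on mobile screens -->
    <meta name="viewport" content="width=device-width, initial-scale=1">
    <link type="text/css" href="style.css" rel="stylesheet">
    <!-- UMD build: exposes the global Mobilizing object used in script.js -->
    <script src="vendors/Mobilizing.umd.js"></script>
    <!-- defer: script.js runs after the document has been parsed, so document.body exists -->
    <script src="script.js" defer></script>
</head>
<body>
</body>
</html>
```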

style.css

```css
html, body {
    margin: 0px;
    font-family: monospace;
}
```

script.js

```javascript
//log the library namespace to inspect its content in the browser console
console.log(Mobilizing);

//make a shortcut to a specific class in Mobilizing
const Pointers = Mobilizing.io.Pointers;

//create an instance of a Pointers management object
const pointers = new Pointers();

//start it (this has to be done for every sensor type)
pointers.start();

//refer to the class documentation to learn all the available events and their arguments
pointers.on("pointerdown", (pointer) => {
    writeEventToBody(pointer);
});

pointers.on("pointerup", (pointer) => {
    writeEventToBody(pointer);
});

pointers.on("pointermove", (pointer) => {
    writeEventToBody(pointer);
});

//shortcut function to write the events in the body
function writeEventToBody(pointer) {
    const date = new Date().toLocaleString("en-US", { hour12: false });
    const newP = document.createElement("p");
    newP.innerText = `${date}: ${pointer.pointerType}, id: ${pointer.pointerId}, x: ${pointer.clientX}, y: ${pointer.clientY}, event: ${pointer.srcEvent.type}`;

    //append the new line to the page
    document.body.appendChild(newP);

    //simple auto-scroll "console effect"
    window.scrollTo({ top: document.body.scrollHeight });
}

//NB: Sensors can be stopped with pointers.stop()
```


Schematic principle of sketches

Mobilizing.js Research, Development, and Design Team

- Project Leader: Dominique Cunin

- General Architecture: Dominique Cunin, Oussama Mubarak, Jonathan Tanant, Jean-Philippe Lambert

- Implementation of Fundamental Components: Dominique Cunin, Jonathan Tanant, Oussama Mubarak

- Refactoring 2019-2020: Jean-Philippe Lambert

- Refactoring 2022-2025: Oussama Mubarak and Dominique Cunin

- User Interface and Visual Identity: Alexandre Dechosal

Research and development for Mobilizing.js are conducted by EnsadLab (the laboratory of the École Nationale Supérieure des Arts Décoratifs) as part of the Reflective Interaction research program, directed by Samuel Bianchini. The project has received support from the French National Research Agency (ANR) for the Cosima research project (Collaborative Situated Media, 2014-2017); from Orange, in the context of the Surexposition project; from the Chaire arts & sciences of the École Polytechnique, the École des Arts Décoratifs - PSL, and the Daniel and Nina Carasso Foundation; from PSL Valorisation; and from the Permanent Commission for Franco-Québécois Cooperation (CPCFQ).

Achievements

Creation Residency at SAT - Montreal

Useful Fictions 4 - Lab 3

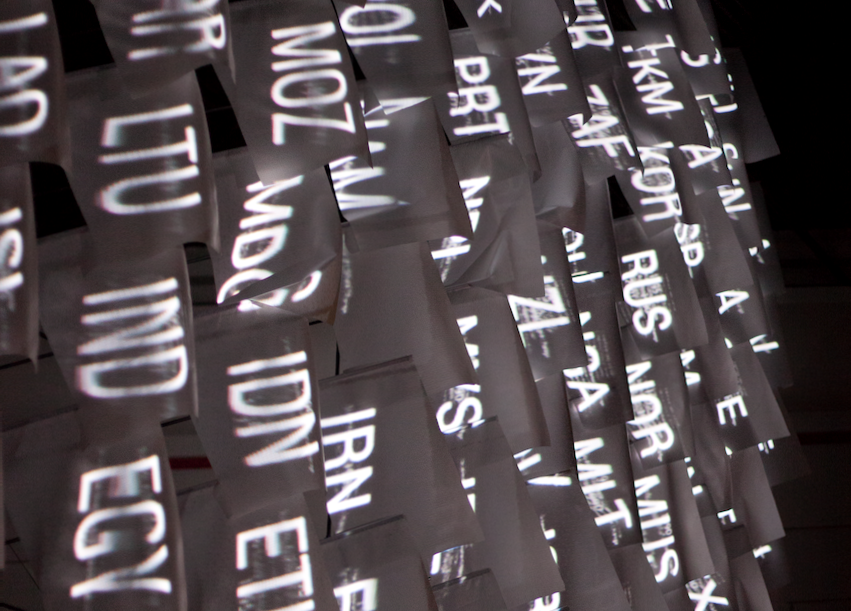

Shared Surveillance @ ISEA 2023

Collective Mobile Mapping: Espace puissance Espace

Visit the site: reflectiveinteraction.ensadlab.fr/espaceespace

Mobilisation

Visit the site: reflectiveinteraction.ensadlab.fr/mobilisation

Surexposition

Visit the site: reflectiveinteraction.ensadlab.fr/surexposition/

Collective Loops

Visit the site: reflectiveinteraction.ensadlab.fr/collective-loops